Is Generative AI Sustainable?

Artificial intelligence (AI) has a range of benefits across a wide variety of sectors, from financial markets, healthcare, and education to engineering, manufacturing, and even the arts, but are we overlooking generative AI’s hidden energy costs?

Artificial intelligence (AI) is all the rage these days, and it’s everywhere. From content generation, real-time translation, and marketing to DNA sequence analysis, self-driving cars, image recognition, video processing, and object detection, AI has become an inescapable (even indispensable?) part of our lives. Some tech evangelists even claim that AI will help humanity solve many of its most pressing challenges, such as alleviating food insecurity and poverty and combatting the effects of climate change.

But in our haste to adopt an apparent technological silver bullet for many of our existential problems, are we overlooking one key point in particular, namely generative AI’s energy thirstiness? Every part of the AI process requires significant energy, but how many of us are actually aware of its carbon footprint? What are the energy requirements of creating the materials needed for the large-scale application of machine learning and artificial intelligence?

Obviously, it’s beyond the scope of the current article to consider the entirety of AI’s supply chain or lifecycle (i.e., from material sourcing to research collaboration and commercial application). In an effort to look beyond the hype, though, we will try to lift the curtain in search of some answers.

Artificial Intelligence and Energy-Intensive Computing

It takes a great deal of energy to learn. Until recently, few people outside of research labs were using artificial intelligence, but the release of ChatGPT in November 2022 changed everything. Today ChatGPT handles hundreds of millions, if not billions, of queries a day, all of which need energy to process.

A 2019 article in MIT Technology Review, for example, noted that training a single AI model can emit as much carbon as five average American cars (old red, white and blue isn’t exactly known for being green) over their lifetimes, including the emissions from manufacturing the cars. The article was based largely on a scientific paper in which researchers from the University of Massachusetts Amherst performed a life cycle assessment for training large AI models. The results were eye-opening: the process emitted the equivalent of more than 626,000 pounds of carbon dioxide.
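Since the rest of this article uses metric tonnes, here is a quick conversion of that figure. It is a minimal sketch that relies only on the exact definition of the pound (0.45359237 kg) and the five-car comparison above:

```python
# 1 lb = 0.45359237 kg exactly; 1 metric tonne = 1,000 kg.
LB_TO_KG = 0.45359237

def pounds_to_tonnes(pounds: float) -> float:
    """Convert a mass in pounds to metric tonnes."""
    return pounds * LB_TO_KG / 1000

total_lbs = 626_000  # CO2e reported for the training run
cars = 5             # the "five cars" comparison

print(f"{pounds_to_tonnes(total_lbs):.0f} tonnes CO2e in total")         # 284
print(f"{pounds_to_tonnes(total_lbs / cars):.1f} tonnes CO2e per car")   # 56.8
```

In other words, each car in the comparison accounts for roughly 57 tonnes of CO2e over its lifetime.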

In the same year, researchers estimated that training Google’s BERT language model, with 110 million parameters, emitted roughly as much carbon as one person’s round-trip transcontinental flight. In 2021, researchers from the University of California, Berkeley, in collaboration with a team from Google, estimated that training GPT-3 consumed up to 1,287 megawatt-hours of electricity, enough to power 360 homes for an entire year.
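How many homes a given amount of energy “powers” depends entirely on the assumed household consumption. The sketch below backs out the average implied by the 360-homes comparison (about 3.6 MWh per home per year) and shows how the count changes under other household averages, which are purely illustrative assumptions:

```python
GPT3_TRAINING_MWH = 1_287  # training estimate quoted above

def homes_powered(total_mwh: float, mwh_per_home_per_year: float) -> float:
    """Number of households whose combined annual electricity use equals total_mwh."""
    return total_mwh / mwh_per_home_per_year

# Household consumption implied by the "360 homes" comparison.
implied = GPT3_TRAINING_MWH / 360
print(f"implied use: {implied:.1f} MWh per home per year")

# Hypothetical alternative household averages, for illustration only.
for mwh in (3.6, 5.0, 10.0):
    homes = homes_powered(GPT3_TRAINING_MWH, mwh)
    print(f"{mwh:>4} MWh per home -> {homes:.0f} homes")
```

The comparison scales inversely with the household figure: doubling the assumed consumption per home halves the number of homes.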

And if you think that this sounds bad (it does), we’re only really considering text generation at this point. The energy consumption picture is significantly worse when dealing with image and video generation.

Querying ChatGPT about Its Energy Consumption

In light of the numbers above, this section feels slightly guilt-inducing. To make matters worse, asking ChatGPT about its own energy use didn’t produce any definitive answers (surprise, surprise). We asked the following:

“What is the estimated carbon footprint for ChatGPT to answer this query?”

And it answered in vaguely informative ChatGPT-ese (recognisably plausible but with little actual content). The answer also included what pop psychologists might refer to as deflection.

“Estimating the carbon footprint of a specific query answered by ChatGPT is a complex task that depends on several factors, including the hardware used to run the model, the energy source for that hardware, and the duration of computation. OpenAI, the organization behind GPT-3, has not publicly disclosed the exact carbon footprint of individual queries answered by their models as of my last knowledge update in September 2021.”

Right, that clarifies things. In order to atone for such a profligate use of artificial intelligence, we might have to cycle to work for a week (unless it’s raining — then all bets are off). And speaking of hardware…

Processing the AI Revolution: CPUs, GPUs, and TPUs

A recent article in the American online magazine Slate (“Silicon Valley’s Favorite New Toy Has a Risky Tradeoff”) argued that we collectively suffer from tunnel vision when it comes to “resource-intensive (and resource-lacking) power-sucking content generators like ChatGPT, as well as the resource-intensive power-sucking data sets, neural networks, and large language models.” (Tell us what you really think.)

Processing large datasets and building neural networks and large language models (LLMs) would be impossible without cutting-edge hardware. As the Slate article reminds us, OpenAI CEO Sam Altman testified before Congress that, given OpenAI’s shortage of GPUs (graphics processing units), he would have preferred fewer users. Like other types of computing, AI has specific hardware requirements (e.g., CPUs, GPUs, TPUs or tensor processing units, memory, and storage), but those requirements can far exceed what other processes and applications typically need.

It is something of a truism that the more powerful the AI, the more energy it needs. During training, AI models multiply extremely large matrices by each other over and over, and as deep learning grows more sophisticated, both the models and the datasets they learn from are becoming exponentially larger. As AI researcher Roy Schwartz explained,

“We’ve seen that a couple of years ago, the largest system was trained on an order of magnitude of maybe several millions of words. And then it became tens of millions or hundreds of millions. And now the system is trained on 40 billion words, so it just takes more and more time. And we’re growing in every possible dimension. Larger matrices that are being multiplied and take a lot of time and energy, and this process is being repeated 40 billion times.”
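To see why growth “in every possible dimension” is so costly, it helps to count the arithmetic. The sketch below uses the standard operation count for a matrix product, plus a widely used rule of thumb (about 6 floating-point operations per parameter per training token) for estimating the compute of training a dense transformer. The 1-billion-parameter model and the one-word-per-token assumption are hypothetical, chosen only for illustration:

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """Approximate floating-point operations to multiply an (m x k) matrix by a (k x n) one.

    Each of the m * n output entries needs k multiplications and roughly k additions."""
    return 2 * m * k * n

def training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb compute for training a dense transformer:
    about 6 FLOPs per parameter per training token (forward + backward pass)."""
    return 6 * params * tokens

# Doubling every dimension of a matrix product makes it 8 times more work.
print(matmul_flops(2048, 2048, 2048) / matmul_flops(1024, 1024, 1024))  # 8.0

# Hypothetical model: 1 billion parameters trained on the 40 billion words from
# the quote (assuming, for simplicity, that one word equals one token).
print(f"{training_flops(1e9, 40e9):.1e} FLOPs")
```

Because compute grows with the product of model size and data size, scaling both at once compounds the energy bill rather than simply adding to it.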

If you’ve trained for a sport or learned to play a musical instrument, you know that it isn’t something you do only once: training is by definition repetitive. The same holds for AI, where experiments are run many times to achieve better results, multiplying the energy costs. As a consequence, the more energy machine learning and artificial intelligence use, the greater their carbon footprint (unless, of course, that energy comes from renewables or is offset in other ways).
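Because each training run consumes a broadly similar amount of energy, repeated experiments multiply emissions, while a cleaner electricity supply scales them down. Here is a minimal sketch of that relationship, assuming a fixed energy cost per run and a single average grid carbon intensity; all input numbers are hypothetical, and embodied or lifecycle emissions are ignored:

```python
def training_emissions_kg(energy_per_run_kwh: float, runs: int,
                          grid_kg_co2e_per_kwh: float,
                          renewable_share: float = 0.0) -> float:
    """Operational CO2e (kg) for a series of training runs.

    Energy scales linearly with the number of runs; only the share of
    electricity not supplied by renewables is counted as emitting."""
    if not 0.0 <= renewable_share <= 1.0:
        raise ValueError("renewable_share must be between 0 and 1")
    total_kwh = energy_per_run_kwh * runs
    return total_kwh * grid_kg_co2e_per_kwh * (1.0 - renewable_share)

# Hypothetical inputs: 10,000 kWh per run on a grid emitting 0.4 kg CO2e per kWh.
one_run = training_emissions_kg(10_000, 1, 0.4)
fifty_runs = training_emissions_kg(10_000, 50, 0.4)
greener = training_emissions_kg(10_000, 50, 0.4, renewable_share=0.75)
print(f"{one_run:,.0f} kg, {fifty_runs:,.0f} kg, {greener:,.0f} kg")
```

Fifty experiments cost fifty times as much as one; sourcing three-quarters of the electricity from renewables cuts that total to a quarter.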

In fact, these models have grown to such an extent that hundreds of thousands of processors are being used, not only to train the models but also to serve the billions of daily user queries (a process referred to as “inference”). Microsoft, for example, partnered with OpenAI to build a so-called “AI supercomputer” running in the Azure cloud. So what, you say? The computer contained over 285,000 processor cores and 10,000 graphics cards! Not to be outdone, a single NVIDIA H100 GPU contains 80 billion transistors.

AI and Data Centres: Bad for the Environment?

Large datasets require large data centres to store and process all of that data. Large data centres require large amounts of energy to run. And just like an internal combustion engine, data centres generate a significant amount of heat, which needs to be dissipated in order to avoid overheating and mechanical failure.

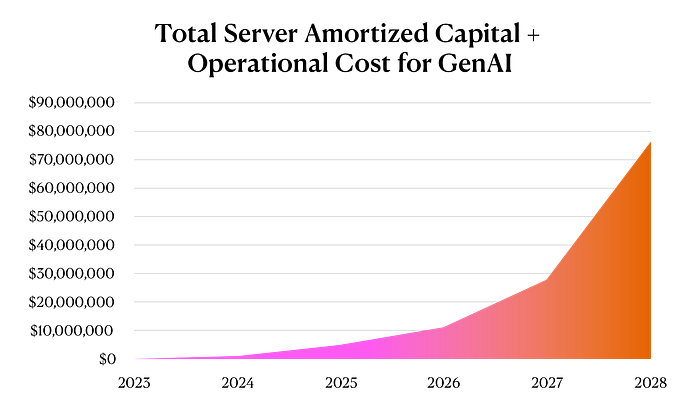

Part of the AI equation involves data scraping: casting as wide a digital net across the internet as possible to collect as much information as possible. That information is then stored in what are referred to as “hyperscale” data centres, which are typically located in large cities or in regions cold enough to cool them naturally. However, running, maintaining, and cooling these data centres 24/7/365 emits hundreds of metric tonnes of carbon, and not all data centres enjoy the advantage of a cold climate. As Forbes has reported, the technology research firm TIRIAS Research predicts that data centre power consumption for generative AI will reach around 4,250 megawatts by 2028, roughly 212 times the 2023 level.
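Megawatts measure power (a rate of energy use), not energy itself. As a rough sketch, assuming the projected 4,250 MW were drawn continuously for a full year, the projection translates into annual energy as follows; the snippet also backs out the 2023 level implied by the 212X multiple:

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; ignores leap years

def implied_baseline(projected: float, growth_factor: float) -> float:
    """Starting value implied by a projected value and its growth multiple."""
    return projected / growth_factor

def annual_energy_twh(average_power_mw: float) -> float:
    """Energy used in a year by a load drawing average_power_mw continuously."""
    return average_power_mw * HOURS_PER_YEAR / 1_000_000  # MWh -> TWh

projected_2028_mw = 4_250
print(f"implied 2023 level: {implied_baseline(projected_2028_mw, 212):.0f} MW")  # 20
print(f"{annual_energy_twh(projected_2028_mw):.1f} TWh per year if sustained")   # 37.2
```

Real facilities do not run at a constant draw, so treat the annual figure as an upper-bound illustration rather than a forecast.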

And while size matters, it isn’t the only predictor of carbon emissions. Although similar in size to GPT-3, France’s open-access BLOOM model has a far lower carbon footprint, consuming 433 MWh of electricity whilst generating 30 tonnes of CO2eq. Google researchers think they can go one better: by optimising the model architecture and processors and using a greener data centre, they say the carbon footprint can be reduced by 100 to 1,000 times.
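One way to see why two similarly sized models can differ so much is to compute the average carbon intensity implied by BLOOM’s figures and compare it with a dirtier supply. The 400 g/kWh grid below is a hypothetical value chosen only for illustration:

```python
def carbon_intensity_g_per_kwh(tonnes_co2e: float, mwh: float) -> float:
    """Average grams of CO2e emitted per kWh consumed."""
    grams = tonnes_co2e * 1_000_000  # 1 tonne = 1,000,000 g
    kwh = mwh * 1_000                # 1 MWh = 1,000 kWh
    return grams / kwh

def emissions_tonnes(mwh: float, g_per_kwh: float) -> float:
    """Tonnes of CO2e from consuming `mwh` at a given carbon intensity."""
    return mwh * 1_000 * g_per_kwh / 1_000_000

bloom = carbon_intensity_g_per_kwh(30, 433)
print(f"BLOOM: about {bloom:.0f} g CO2e per kWh")  # 69

# The same 433 MWh on a hypothetical 400 g/kWh grid.
print(f"{emissions_tonnes(433, 400):.1f} tonnes CO2e")  # 173.2
```

Holding energy use fixed, the footprint scales directly with how clean the electricity is, which is one reason data centre location and energy sourcing matter as much as model size.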

Down the Drain: Microsoft, Meta, and AI’s Hidden Water Consumption

According to a team of researchers, OpenAI’s chatbot ChatGPT downs 500 ml of water for every 5–50 prompts it answers.
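Put per prompt, that range works out as follows. The daily prompt count in the second half is a hypothetical round number used only to show how quickly small amounts add up:

```python
BOTTLE_ML = 500  # one 500 ml bottle per 5-50 prompts, per the researchers

def ml_per_prompt(prompts_per_bottle: int) -> float:
    """Water attributed to a single prompt, in millilitres."""
    return BOTTLE_ML / prompts_per_bottle

low, high = ml_per_prompt(50), ml_per_prompt(5)
print(f"{low:.0f}-{high:.0f} ml of water per prompt")  # 10-100 ml

# Hypothetical volume: 10 million prompts a day.
prompts_per_day = 10_000_000
print(f"{low * prompts_per_day / 1000:,.0f}-{high * prompts_per_day / 1000:,.0f} litres per day")
```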

In their paper “Making AI Less ‘Thirsty’: Uncovering and Addressing the Secret Water Footprint of AI Models,” the team found that “training GPT-3 in Microsoft’s state-of-the-art U.S. data centers can directly consume 700,000 liters of clean freshwater (enough for producing 370 BMW cars or 320 Tesla electric vehicles) and the water consumption would have been tripled if training were done in Microsoft’s Asian data centers, but such information has been kept as a secret.”

According to Microsoft’s 2022 Environmental Sustainability Report, the company’s global water consumption surged by more than a third from 2021 to 2022, an increase that has been attributed largely to its heavy investment in AI. In real-world terms, that amounts to nearly 1.7 billion gallons of water, enough to fill more than 2,500 Olympic-sized swimming pools.
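The swimming-pool comparison is easy to check, assuming US gallons (3.785411784 litres each, exact by definition) and a nominal Olympic pool of 50 m × 25 m × 2 m, or 2,500 cubic metres:

```python
LITRES_PER_US_GALLON = 3.785411784  # exact by definition
OLYMPIC_POOL_LITRES = 2_500_000     # assumed nominal pool: 50 m x 25 m x 2 m = 2,500 m^3

def olympic_pools(us_gallons: float) -> float:
    """How many nominal Olympic pools a volume in US gallons would fill."""
    return us_gallons * LITRES_PER_US_GALLON / OLYMPIC_POOL_LITRES

print(f"{olympic_pools(1.7e9):,.0f} pools")  # 2,574
```

Deeper pools would lower the count somewhat, but under this assumption the “more than 2,500” figure holds.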

One answer to the burning issue of data centre heat is liquid cooling (including immersion cooling), which, rather counterintuitively, can use less water than other forms of cooling such as air cooling. Liquids carry heat away far more effectively than air (by some estimates up to 1,000 times more), and liquid cooling also requires a much smaller physical infrastructure. This may be one reason why, according to recent reports, Meta plans to make more extensive use of liquid cooling in its AI-centric data centres as it continues to invest in an AI-dominated future.

Augmented Intelligence: AI’s Greener Cousin?

So, is it all doom and gloom? Are we wasting yet another of the earth’s precious resources in our thirst for knowledge and innovation? Well, yes and no: yes in the sense that increasingly worrisome amounts of water are being used (and will continue to be as the technology scales), but no in the sense that humanity has proven itself resourceful time and again.

For example, GPUs are becoming more energy efficient, with each new generation of processors carrying out more computations using less power. NVIDIA’s H100 is said to be 10% more energy efficient than its predecessor. And, as we’ve just read, Microsoft and Meta are aware of the problem. More importantly, they’re taking concrete measures, such as liquid cooling, to combat it. And there are other efficiency gains to be had across the AI ecosystem, from improvements in neural-network architecture to sourcing the electricity used for computation from renewable sources such as solar and wind.

Another piece of the puzzle might be augmented intelligence (sometimes referred to as intelligence amplification). You might remember that we mentioned data scraping, which, in its untargeted approach, resembles what is known as brute-force analytics (an approach also common in crypto).

Let’s take a marine analogy. We all know that trawling the ocean floor causes immense destruction because of its indiscriminate nature. Similarly, training hundreds of thousands or even millions of AI models simply to see what works best is costly and wasteful. A more targeted alternative would be to design experiments that avoid this kind of brute-force search.
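The scale of brute force becomes clear once you count combinations. In the hypothetical search space below, five hyperparameters with ten candidate values each already require 100,000 training runs if every combination is tried. As a stand-in for a more targeted design, the sketch uses simple random sampling under a fixed budget; that is only one of many possible alternatives (the article does not prescribe a method), and the comparison assumes each run costs roughly the same energy:

```python
import random
from math import prod

# Hypothetical search space: five hyperparameters, ten candidate values each.
SEARCH_SPACE = {
    "learning_rate": [10.0 ** -e for e in range(1, 11)],
    "batch_size": [2 ** p for p in range(4, 14)],
    "num_layers": list(range(2, 22, 2)),
    "dropout": [i / 20 for i in range(10)],
    "warmup_steps": list(range(0, 10_000, 1_000)),
}

def grid_size(space: dict[str, list]) -> int:
    """Training runs needed to try every combination (exhaustive grid search)."""
    return prod(len(values) for values in space.values())

def random_configs(space: dict[str, list], budget: int, seed: int = 0) -> list[dict]:
    """Draw `budget` configurations at random under a fixed compute budget."""
    rng = random.Random(seed)
    return [{name: rng.choice(values) for name, values in space.items()}
            for _ in range(budget)]

exhaustive = grid_size(SEARCH_SPACE)
sampled = random_configs(SEARCH_SPACE, budget=50)
print(exhaustive)                 # 100000
print(len(sampled) / exhaustive)  # 0.0005, i.e. 0.05% of the brute-force runs
```

Each additional hyperparameter multiplies the exhaustive count, whereas a fixed budget caps the energy spent regardless of how large the space grows.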

Augmented intelligence, by contrast, is human-centric, with AI and machine learning working in the service of humanity and guided by human judgement and human decision-making. This is the very opposite of the singularity, the hypothetical point at which technology such as artificial intelligence exceeds our control. Instead of watching from the sidelines, human intelligence takes centre stage, with artificial intelligence augmenting or complementing it: technology that benefits us without the harm.

Applying AI More Efficiently Across Sectors and Industries

If we step back for a moment, what we are asking is not whether artificial intelligence carries a range of benefits (it does) or whether we should restrict its applications (we shouldn’t), but rather whether we should be more strategic in how we use it. With every new technology comes a certain degree of novelty and curiosity, and generative AI is no exception. We are right to explore the power of emergent technologies in order to better understand them, but we should also be aware of questions concerning their sustainability and environmental impact.

As Euronews reported recently, “if Google were to use AI for its around 9 billion daily searches, it would need 29.2 terawatt hours (TWh) of power each year — the equivalent to the annual electricity consumption of Ireland.” Given the energy costs of generative AI, should we be using it for every Google or Bing search, to generate text for our LinkedIn profiles, or to answer mundane queries that could otherwise be answered with a few minutes of research? Jevons paradox suggests that as tools such as AI become more efficient and more widely available, more people will use them, which can push total energy demand higher rather than lower.
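Two quick calculations make these points concrete. The first divides the Euronews annual total by the number of searches to get the implied energy per AI-assisted search. The second is a deliberately simplified illustration of the Jevons effect, with hypothetical numbers: a 30% efficiency gain per use is outweighed when cheaper, more convenient access doubles usage.

```python
def wh_per_search(twh_per_year: float, searches_per_day: float) -> float:
    """Average energy per search implied by an annual total."""
    wh_per_year = twh_per_year * 1e12  # 1 TWh = 10^12 Wh
    return wh_per_year / (searches_per_day * 365)

print(f"{wh_per_search(29.2, 9e9):.1f} Wh per AI-assisted search")  # 8.9

def total_energy(uses: float, energy_per_use: float) -> float:
    """Total energy is simply usage multiplied by energy per use."""
    return uses * energy_per_use

# Hypothetical rebound: each use needs 30% less energy, but usage doubles.
before = total_energy(1.0, 1.0)
after = total_energy(2.0, 0.7)
print(after / before)  # 1.4, i.e. total demand rises by 40%
```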

A Smarter Approach to Artificial Intelligence

Wouldn’t it be far better to support the AI ecosystem where it can make the most difference in people’s lives, shifting the conversation away from novelty in favour of impact? Such a discussion would underscore how farmers are sowing the seeds of agricultural sustainability and increased efficiency by using AI to collect insights about soil health, or to monitor weather conditions, or to apply fertilizers more effectively.

AI and climate change: the combination offers a range of tantalizing possibilities. Net zero, the shift towards digitalisation, and decarbonisation efforts are all made considerably easier by harnessing the power of machine learning and artificial intelligence to gather, analyse and interpret large, complex datasets, which in turn allows for greener, data-driven decisions. As the London School of Economics explained in an article,

“AI can be used in climate research and modelling, in financial markets (e.g. to forecast carbon prices), and in education and behavioural change (e.g. using data on behavioural patterns to ‘nudge’ people to choose a more sustainable option, or to calculate the carbon footprint of products to help individuals make informed decisions).”

And we haven’t even touched on things such as energy grid optimisation, hazard warning forecasting, carbon removal, and the use of satellite imagery in conjunction with AI to support and increase biodiversity here on earth. Once we go AI, apparently, we never go back.

We would also need to talk about how AI is being used in the service of digitalising healthcare to provide better patient care and overall patient outcomes. From streamlined data collection and processing to optimising treatment plans and even detecting and diagnosing cancerous cells that at first might go unnoticed by doctors, artificial intelligence can help us to see (quite literally in some cases) diseases and their treatments in new ways, the implications of which are immense for the future of humanity’s collective health.

And the same can be said across a range of sectors, including finance, manufacturing, logistics, and space (among many others).

Final Words on AI’s Energy Use

The final word on artificial intelligence’s energy consumption and carbon footprint is, of course, that there are no final words. With AI technology and hardware constantly evolving, often in conjunction with socio-economic and geopolitical realities, the conversation and indeed the goalposts will constantly shift.

If anything, we’d love to hear from stakeholders involved in the AI space.

· Are you a venture capital manager funding and supporting innovative AI-centric SMEs as they look to bring their products to the market and scale?

· Are you a developer or data scientist at the forefront of the industry with thoughts and insights about the state of machine learning algorithms and natural language processing in terms of their energy use?

· Are you an entrepreneur looking to capitalise on this emergent tech to create AI-based products or services to address the needs of industry or humanity?

· Are you a researcher, academic, or AI ethicist with a focus on artificial intelligence and its environmental impact?

· Are you an AI service provider (Sam Altman, we’re looking at you)?

· Or are you simply a casual user of AI?

Share your thoughts in the comments section below!